Controllable Probabilistic Semantic Image Inpainting

Abstract

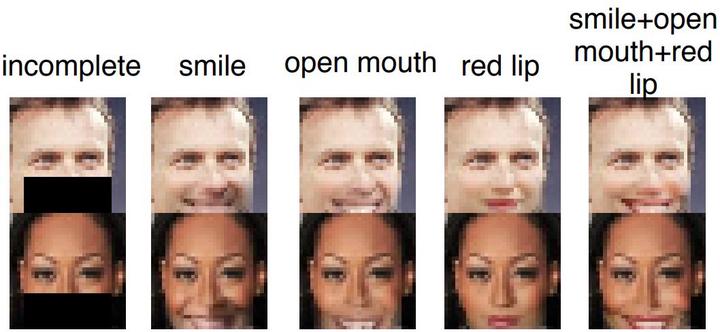

We develop a method for user-controllable semantic image inpainting: Given an arbitrary set of observed pixels, the unobserved pixels can be imputed in a user-controllable range of possibilities, each of which is semantically coherent and locally consistent with the observed pixels. We achieve this using a deep generative model bringing together: an encoder which can encode an arbitrary set of observed pixels, latent variables which are trained to represent disentangled factors of variations, and a bidirectional PixelCNN model. We experimentally demonstrate that our method can generate plausible inpainting results matching the user-specified semantics, but is still coherent with observed pixels. We justify our choices of architecture and training regime through more experiments.